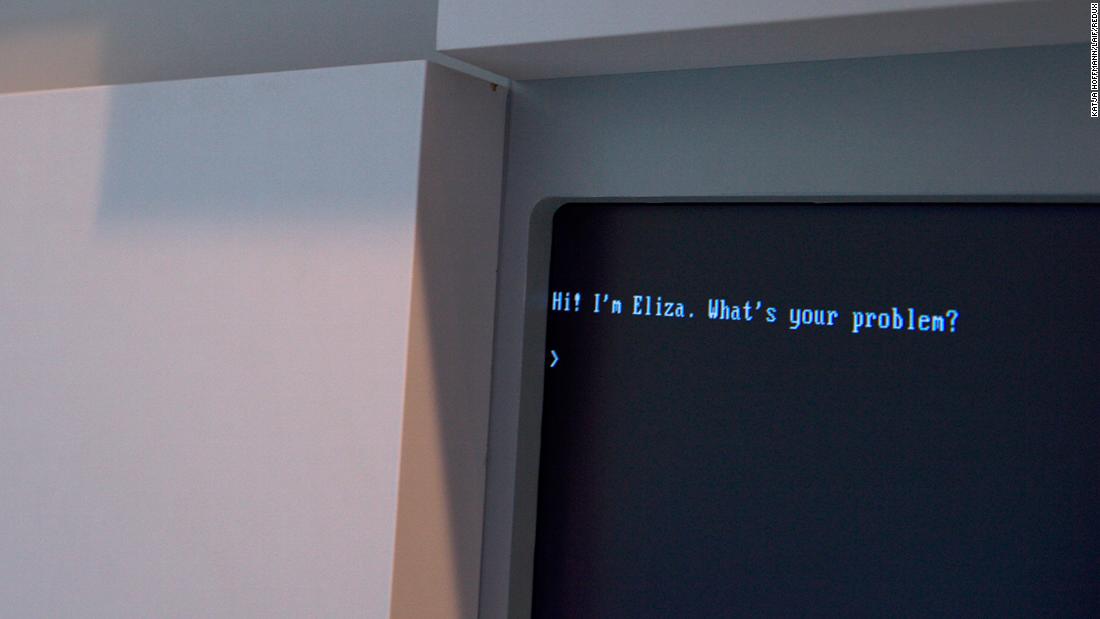

For Weizenbaum, that fact was cause for concern, according to his 2008 MIT obituary. The people who interacted with Eliza were willing to open up to it, even though they knew it was a computer program. “ELIZA shows how easy it is to create and maintain the illusion of understanding, and thus the illusion of judgment,” Weizenbaum wrote in 1966. “There is a certain danger lurking there.” He went on to become a critic of artificial intelligence.

Even before then, the complicated relationship between humans and artificial intelligence was evident in the plots of Hollywood movies such as “Her” and “Ex Machina,” not to mention harmless arguments with people who insist on saying “thank you” to voice assistants like Alexa or Siri.

Others warn that the technology behind AI-powered chatbots remains far more limited than some would like. “These technologies are very good at pretending to be human and sounding human, but they’re not deep,” said Gary Marcus, an AI researcher and professor emeritus at New York University. “The system is an imitator, but a very superficial imitator. They don’t really understand what they’re talking about.”

Yet, as these services expand into every corner of our lives and companies take steps to make these tools more personalized, our relationship with them can become more complicated as well.

Evolution of chatbots

Sanjeev P. Khudanpur remembers chatting with Eliza in graduate school. Despite its historical importance in the tech industry, it didn’t take long to understand its limitations, he said.

It could convincingly imitate a text conversation for only about a dozen exchanges before users realized that “no, it’s not really smart. It’s just trying to prolong the conversation in some way,” said Khudanpur, who specializes in the application of information-theoretic methods to human language technology and is a professor at Johns Hopkins University.

In the decades that followed, however, these tools shifted away from the idea of “talking to a computer.” That was “because the problem turned out to be very difficult,” said Khudanpur. Instead, he said, the focus shifted to “goal-oriented dialogue.”

To understand the difference, think of a conversation with Alexa or Siri. Typically, these digital assistants are asked to help with buying tickets, checking the weather, playing songs, and more. That is goal-oriented dialogue, and it became a major focus of academic and industry research as computer scientists sought to glean something useful from computers’ ability to scan human language.

These assistants used technology similar to that of the earlier social chatbots, Khudanpur said.

There was a decades-long “lull” in the technology until the internet became widespread, he added. “The big breakthroughs probably came in this millennium,” Khudanpur said, “with the rise of companies that successfully employed computerized agents to perform routine tasks.”

“People are always upset when their bags go missing. The human agents who deal with them are always stressed out by all the negativity. That’s why they said, ‘Let the computer do it,’” said Khudanpur. “You can yell at the computer, but all the computer wants to know is, ‘Give me your tag number so I can tell you where your bag is.’”

Back to social chatbots and social issues

In the early 2000s, researchers began revisiting the development of social chatbots capable of conducting long conversations with humans. These chatbots were trained on large amounts of data, often from the internet, and learned to mimic the way humans speak very well, but they also risked reflecting the worst of the internet.

“The more you chat with Tay, the smarter she gets, making the experience more personalized,” Microsoft said of its Tay chatbot when it launched in 2016.

This refrain would be repeated by other tech giants that released public chatbots, including Meta’s BlenderBot3, released earlier this month. Among other controversial statements, the Meta chatbot falsely claimed that Donald Trump is still president and that there is “absolutely a lot of evidence” that the election was stolen.

BlenderBot3 also professed to be more than a bot. In one conversation, it claimed that “the fact that I am alive and conscious is what makes me human.”

Despite all the progress since Eliza and the large amount of new data available to train these language processing programs, NYU professor Marcus said, “It is not clear whether we can really build reliable and secure chatbots.”

Khudanpur, on the other hand, is optimistic about potential use cases. “I have a big picture of how AI empowers humans on an individual level,” he said. “Imagine if my bot could read all the scientific papers in my field. I wouldn’t have to go read them all. I could simply think, ask questions, and conduct a dialogue,” he said. “In other words, I will have my alter ego with complementary psychic powers.”

Source: www.cnn.com